GenAI Daily - January 27, 2026: Microsoft's Maia 200 Chip, Intel's Core Ultra Series 3 Launch, Enterprise AI Shift to Pragmatism

Top Story

Microsoft Unveils Maia 200: Second-Generation AI Chip Challenges NVIDIA's Dominance

Microsoft announced the next generation of its artificial intelligence chip, a potential alternative to leading processors from Nvidia, with the chip offering 30% higher performance than alternatives for the same price.
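
A note on the arithmetic: 30% more performance at the same price is not the same as a 30% price cut. The short sketch below works out the implied change in cost per unit of performance. The function name is ours, and it assumes that "performance" is a single scalar a buyer can purchase proportionally, which is a simplification and not something Microsoft has stated.

```python
def cost_per_unit_change(perf_gain: float, price_ratio: float = 1.0) -> float:
    """Fractional change in cost per unit of performance.

    perf_gain: relative performance gain (0.30 means 30% higher).
    price_ratio: new price divided by baseline price (1.0 means same price).
    Hypothetical helper for illustration only.
    """
    new_cost_per_unit = price_ratio / (1.0 + perf_gain)
    return new_cost_per_unit - 1.0


# 30% higher performance at the same price, as claimed for Maia 200
change = cost_per_unit_change(0.30)
print(f"{change:.1%}")  # -23.1%
```

Under those assumptions, the claim works out to roughly 23% lower cost per unit of performance, not 30%.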

Microsoft is outfitting its U.S. Central region of data centers with Maia 200 chips, and they'll arrive at the U.S. West 3 region after that, with additional locations to follow. The chips use Taiwan Semiconductor Manufacturing Co.'s 3 nanometer process.

The strategic implications run deeper than performance specs.

The Maia 200 chips rely on Ethernet cables rather than the InfiniBand standard. Nvidia has sold InfiniBand switches since its 2020 Mellanox acquisition, so the choice signals Microsoft's intent to reduce dependency on Nvidia's ecosystem.

Cloud providers face surging demand from generative AI model developers such as Anthropic and OpenAI and from companies building AI agents and other products on top of the popular models. Data center operators and infrastructure providers are trying to increase their computing prowess while keeping power consumption in check.

Why it matters: This move validates the broader industry shift toward custom silicon and signals intensifying competition in AI compute infrastructure, potentially giving enterprise buyers more leverage in cloud pricing negotiations.

Key Developments

Intel Core Ultra Series 3 Goes on Sale Today

Intel® Core™ Ultra Series 3 goes on sale January 27, with Intel claiming the best battery life of any generation of Intel-powered AI PC. Intel launched the processors at CES 2026, marking the debut of the first AI PC platform built on Intel's 18A process technology.

Intel Core Ultra Series 3 delivers competitive advantages in critical edge AI workloads with up to 1.9x higher large language model (LLM) performance, up to 2.3x better performance per watt per dollar on end-to-end video analytics, and up to 4.5x higher throughput on vision language action (VLA) models. The integrated AI acceleration enables superior total cost of ownership (TCO) through a single system on chip (SoC) solution versus traditional multi-chip CPU and GPU architectures.

Impact: Enterprises can now deploy edge AI applications with significantly better economics, particularly for video analytics and local LLM inference.

Oracle AI Database 26ai Enterprise Edition Launches for Linux

Oracle AI Database 26ai Enterprise Edition for Linux x86-64 will be released in January 2026 as part of the quarterly Release Update (version 23.26.1). Oracle says its engineering team has been building a new generation of database that architects AI and data together and delivers AI-native data management on all the leading cloud platforms.

Having delivered Oracle AI Database on all leading clouds, Oracle is now making it available for on-premises Linux x86-64 platforms, giving customers more choice to simplify architectures, accelerate AI-driven development, and meet security and performance needs wherever their data lives.

Impact: This gives enterprises hybrid deployment flexibility and addresses data sovereignty concerns while maintaining cloud-native AI capabilities.

2026 Enterprise AI Pivot: From Hype to Pragmatic Implementation

If 2025 was the year AI got a vibe check, 2026 will be the year the tech gets practical. The focus is already shifting away from building ever-larger language models and toward the harder work of making AI usable. In practice, that involves deploying smaller models where they fit, embedding intelligence into physical devices, and designing systems that integrate cleanly into human workflows.

"Fine-tuned SLMs will be the big trend and become a staple used by mature AI enterprises in 2026, as the cost and performance advantages will drive usage over out-of-the-box LLMs," Andy Markus, AT&T's chief data officer, told TechCrunch.

"The efficiency, cost-effectiveness, and adaptability of SLMs make them ideal for tailored applications where precision is paramount," said Jon Knisley, an AI strategist at ABBYY.

Impact: Enterprise AI strategy shifts from general-purpose models to specialized, cost-effective solutions optimized for specific business functions.

Product Launches

- Intel Core Ultra Series 3: First AI PC platform on Intel 18A process with up to 1.9x LLM performance improvement - Available globally starting today (Intel)

- Oracle AI Database 26ai: Enterprise Edition for Linux x86-64 on-premises deployment - January 2026 release (Oracle)

- Microsoft Maia 200: Second-generation AI chip with 30% higher performance than alternatives at the same price - Rolling out to U.S. Central data centers (CNBC)

Funding & Deals

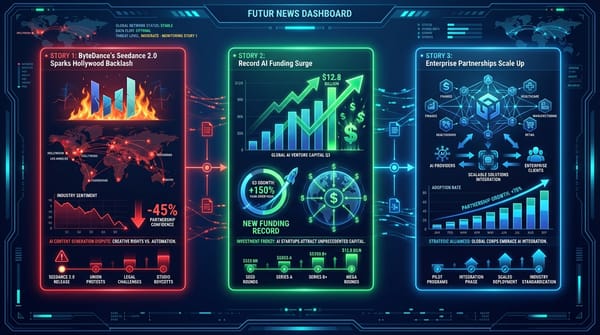

- xAI - $20B Series E: Elon Musk's AI company behind Grok raised massive funding to accelerate large-scale AI development - Led by Valor Equity Partners with strategic investors including NVIDIA and Cisco (TechCrunch)

- LMArena - $150M Series A: AI model evaluation platform reached $1.7B valuation with 5M monthly users - Led by Felicis Ventures and UC Investments (Crunchbase)

- Accenture Acquires Faculty: UK-based AI services company with decision intelligence platform - Terms undisclosed, completion subject to regulatory approval (Accenture)

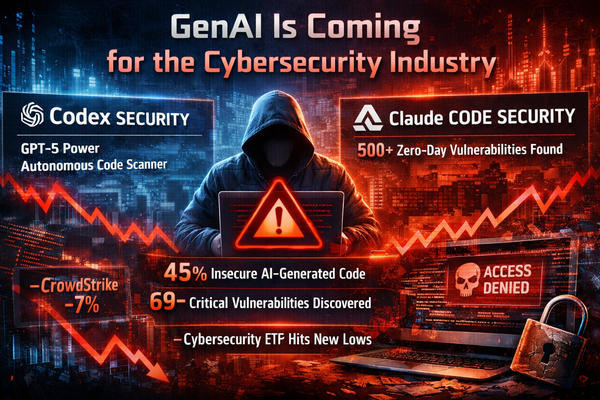

- CrowdStrike Acquires SGNL: Continuous Identity platform for AI-era security - Predominantly cash deal expected to close Q1 FY'27 (CrowdStrike)

Tomorrow's Watch List

- Watch for enterprise adoption metrics on Intel's new AI PC platform as systems hit retail channels

- Monitor Microsoft's Maia 200 deployment timeline to additional Azure regions

- Track Oracle's AI Database 26ai customer uptake in on-premises enterprise segments

Related reading: Check out this week's Deep Insights analysis for strategic context on these developments.