The 2026 AI Infrastructure Divide: Production-Ready vs. Pilot Purgatory

The enterprise AI gold rush just hit a wall. While vendors promised transformation, data reveals a harsh reality: only 8.6% of companies have AI agents in production. The rest? Stuck in what industry insiders call "Pilot Purgatory."

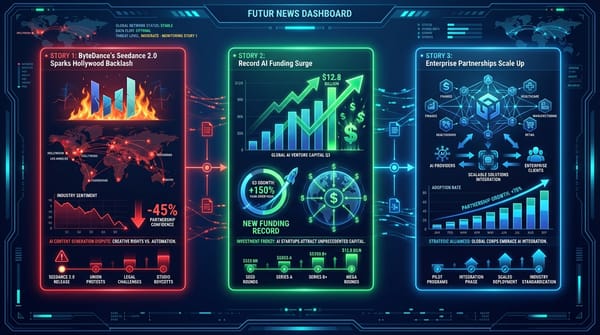

But something fundamental shifted this week. NVIDIA's Rubin platform entered production with a promised 10x performance gain. Anthropic's Model Context Protocol (MCP) became the "USB-C for AI" with backing from OpenAI, Microsoft, and Google. Meanwhile, Cyera raised $400 million at a $9 billion valuation, tripling in twelve months, because enterprises finally realized that data security isn't a nice-to-have for AI. It's the bottleneck.

Here's what the headlines missed: 2026 isn't about better models. It's about AI infrastructure. The companies building production-ready AI systems will dominate. The ones still writing checks for custom LLM development will learn this lesson the expensive way.

The divide is already visible. On one side: enterprises deploying standardized, secure, cost-effective AI through established platforms. On the other: organizations burning millions on pilots that never scale because they lack the infrastructure foundation.

This is the infrastructure era. And most CTOs are building for the wrong decade.

The Story

The Setup

For two years, the narrative was simple: build custom AI models, chase AGI capabilities, and pilot everything. Enterprise software budgets ballooned as companies hired PhD teams to fine-tune transformers and chase frontier model performance. The playbook was "experiment first, infrastructure later."

CFOs wrote the checks because everyone believed AI would magically transform business operations once the models got smart enough. The assumption: better algorithms would overcome infrastructure limitations.

The Shift

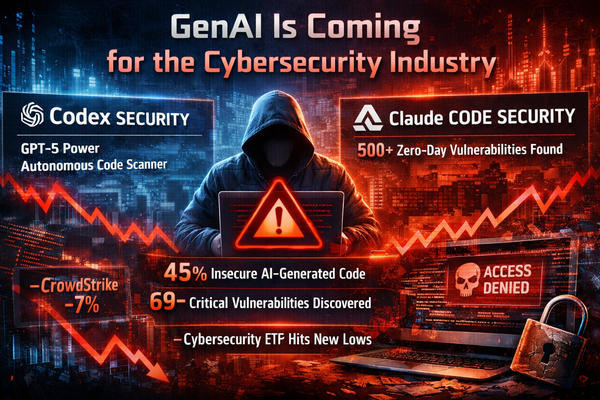

This week exposed the infrastructure reality. Cyera's $9 billion valuation reveals that data security, not model performance, has become the primary constraint on AI adoption. Only 13% of enterprises understand how AI touches their data, which leaves the rest exposed to serious regulatory and business risk.

Meanwhile, industry leaders abandoned AGI promises entirely. OpenAI's Sam Altman called AGI "not a super useful term." Salesforce's Marc Benioff labeled it marketing "hypnosis." The message is clear: the future is domain-specific AI that works reliably, not general intelligence that might exist someday.

The most telling signal: MCP standardization. When OpenAI, Google, and Microsoft align on infrastructure standards, they're not competing on algorithms anymore. They're competing on who can make AI deployment easiest for enterprises.

The Pattern

This is the exact playbook that transformed cloud computing. In 2010, enterprises built custom data centers and hired armies of sysadmins. By 2015, AWS had standardized infrastructure, and custom deployments became legacy liabilities.

The same shift is happening now. DeepSeek's open-source R1 model delivers GPT-4 performance at 50% of OpenAI's cost. Chinese models are democratizing advanced capabilities. Custom model development is becoming an expensive distraction from solving real business problems.

Winners are emerging: companies that nail data governance (Cyera), standardized integration (MCP adopters), and cost-effective deployment (small model specialists). Losers: organizations still burning budgets on bespoke AI architectures.

The Stakes

The window is closing fast. NVIDIA's Rubin platform is slated to ship in H2 2026 with a promised 10x performance gain, creating a new tier of infrastructure capabilities. Companies that adapt to standardized AI infrastructure will be positioned to use this next generation. Those stuck in custom development cycles won't.

More importantly, regulatory pressure is intensifying. Authorities in the EU, the UK, and several other countries are investigating AI governance failures. The data security requirements that made Cyera worth $9 billion will become mandatory compliance baselines within 18 months.

The cost of waiting: enterprises that don't standardize on production-ready AI platforms by Q3 2026 will face 3x higher deployment costs and 12-month delays as they rebuild foundations.

What This Means For You

For CTOs

Stop custom model development immediately. Redirect those budgets toward data security and MCP-compatible platforms. By March 2026, evaluate every AI vendor on infrastructure readiness, not model performance. Your Q2 priority: implement comprehensive data governance for AI systems. This is now table stakes, not a competitive advantage.

Standardize on MCP-compatible tools. Any AI platform that doesn't support Model Context Protocol will become a legacy liability within 12 months. Start requiring MCP support in all new AI vendor evaluations.
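To make "MCP support" concrete during a vendor evaluation, it helps to know what the protocol looks like on the wire. MCP messages are JSON-RPC 2.0, and a client typically discovers a server's tools with `tools/list` and invokes one with `tools/call`. The sketch below only builds those two request payloads; it does not open a connection or perform the initialization handshake a real client needs. The tool name `lookup_customer` and its arguments are hypothetical, chosen purely for illustration.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC request ids; any unique value per request works


def mcp_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind an MCP client sends."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask a server which tools it exposes.
list_tools = mcp_request("tools/list")

# Invoke one tool by name. "lookup_customer" is a made-up example tool.
call_tool = mcp_request(
    "tools/call",
    {"name": "lookup_customer", "arguments": {"customer_id": "C-1001"}},
)

print(list_tools)
print(call_tool)
```

A practical evaluation question follows from this: can the vendor's platform answer these two requests for your own systems without custom glue code?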

Budget shift required: Move 60% of AI spend from model development to infrastructure and security by Q4 2026. Companies that don't make this shift will pay 3x more for the same capabilities.

For AI Product Leaders

Abandon the custom model strategy. Your competitive advantage isn't in training better transformers—it's in domain-specific applications using standardized models. Focus product development on workflow integration, not algorithmic innovation.

Partner with infrastructure leaders. Companies like Cyera that solve data governance will become mandatory dependencies. Build partnerships now or face integration complexity later.

Price for infrastructure reality. DeepSeek's 50% cost advantage over OpenAI will become the new baseline. Adjust pricing models to compete with open source alternatives.

For Engineering Leaders

Hire for infrastructure, not algorithms. Stop recruiting ML PhD teams. Start hiring engineers who understand MCP, data lineage, and production AI security. The skillset shift is dramatic and immediate.

Redesign for standardization. Any custom AI infrastructure you're building today will be obsolete by 2027. Design systems around standard APIs and open protocols, not proprietary architectures.

Security-first development. Implement AI data governance in every new system. This isn't a nice-to-have anymore—it's the only way to scale beyond pilots.
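As one small illustration of what "governance in every new system" can mean in code, here is a minimal sketch of a redaction gate that runs before any text is sent to a model. It is an assumption-laden toy, not how Cyera or any other platform works: the two regex patterns and the `redact` helper are hypothetical, and production tooling would classify far more data types and track lineage as well.

```python
import re

# Illustrative patterns only; real governance tooling covers far more than this.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace sensitive matches with placeholders and count what was removed."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, found = pattern.subn(f"[{label}]", text)
        counts[label] = found
    return text, counts


prompt = "Refund jane.doe@example.com, SSN 123-45-6789, for order 8812."
safe_prompt, audit = redact(prompt)

print(safe_prompt)  # the text that would actually reach the model
print(audit)        # per-category counts, suitable for an audit log
```

The design point is the return value: the gate produces both the sanitized prompt and a record of what it removed, so the same step that protects the data also answers the question of how AI touches it.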

What We're Watching

By Q2 2026, we'll see major cloud providers announce MCP-native AI services, making custom integration obsolete. If Google or Microsoft launches standardized agentic workflows, expect 50% of custom AI projects to get cancelled.

Open source models will achieve GPT-4 Turbo performance at 30% of current costs by March 2026, forcing every enterprise to recalculate its AI economics. Companies still paying premium pricing for proprietary models will face board-level questions.
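The recalculation itself is simple arithmetic, and a rough sketch might look like the following. Every number here is a placeholder: the monthly token volume and the per-million-token price are invented for illustration, and the 0.30 ratio is just the prediction above expressed as a parameter. Substitute your own figures.

```python
def recalculate(tokens_millions: float, price_per_million: float, cost_ratio: float) -> dict:
    """Compare current monthly model spend with an alternative priced at cost_ratio of it."""
    current = tokens_millions * price_per_million
    alternative = current * cost_ratio
    return {
        "current": current,
        "alternative": alternative,
        "monthly_savings": current - alternative,
    }


# Hypothetical inputs: 500M tokens per month at $10 per million tokens,
# with an alternative that costs 30% as much.
result = recalculate(tokens_millions=500, price_per_million=10.0, cost_ratio=0.30)

for name, dollars in result.items():
    print(f"{name}: ${dollars:,.2f}")
```

This deliberately ignores hosting, migration, and evaluation costs, which is exactly where an infrastructure-first budget would need to look next.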

By Q3 2026, AI data governance will become a regulatory requirement in major markets. The EU's AI Act enforcement and potential US federal requirements will make Cyera-style platforms mandatory, not optional.

Watch for the first major AI security breach at a Fortune 500 company. When it happens—and it will—expect emergency budget reallocations toward data security platforms across the entire enterprise market.

If NVIDIA's Rubin platform delivers promised 10x improvements in H2 2026, companies without infrastructure-ready AI deployments will face a two-year capability gap that may be impossible to close.

The Bottom Line

The AI infrastructure era started this week. The question isn't whether your custom AI models will become obsolete—it's whether you'll pivot to production-ready platforms before your competitors do.

Mark this prediction: by January 2027, enterprises will be divided into two categories. On one side will be infrastructure leaders deploying standardized, secure, cost-effective AI at scale. On the other will be everyone else, still stuck in pilot purgatory, burning budgets on custom solutions that never shipped.

The playbook just flipped from "build your own AI" to "deploy AI infrastructure." Companies that recognize this shift will dominate their markets. Those that don't will spend 2027 explaining to boards why their AI initiatives cost twice as much and delivered half the results.

The infrastructure divide is here. Which side is your company on?