The $50 Billion Infrastructure Ceiling That's About to Crush Your AI Strategy

The enterprise AI gold rush just hit a wall. While everyone was busy debating AGI timelines and model capabilities, a more fundamental crisis was brewing beneath the surface. This week, the infrastructure ceiling became impossible to ignore.

Apple, the company that builds almost everything in-house, just announced it will rely on Google to power Siri, in a deal reported at roughly $1 billion a year, because building competitive AI infrastructure on its own has become prohibitively expensive. Microsoft unveiled its second-generation Maia 200 chip in a bid to reduce its $50+ billion dependency on NVIDIA. Meanwhile, PJM Interconnection projects a six-gigawatt power shortfall by 2027, roughly the output of six large nuclear reactors, as AI data centers consume electricity faster than grids can scale.

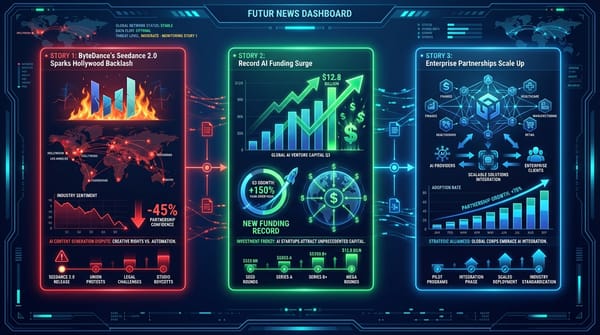

The numbers tell the story: xAI raised $20 billion, Anthropic is reportedly raising $10 billion at a $350 billion valuation, and even seed rounds have reached this scale, with Humans& raising $480 million. This isn't venture capital anymore. It's infrastructure financing on a national scale.

What's happening isn't just about compute scarcity or funding concentration. It's the Great Consolidation — the moment when enterprise AI shifts from "try everything" to "pick your platform and pray you chose correctly."

The Story

The Setup

For the past two years, enterprise AI operated under the "portfolio theory." Try multiple models, run parallel pilots, keep optionality open. OpenAI for reasoning, Anthropic for safety, local models for data sensitivity. CIOs built vendor-agnostic strategies while waiting for the market to mature.

The conventional wisdom was simple: avoid vendor lock-in, maintain flexibility, let the best models win through competition.

The Shift

That strategy just became financially impossible. The infrastructure requirements for competitive AI have crossed the "$50 billion threshold," a point where only a handful of companies can meaningfully compete, and a shrinking field of providers leaves enterprises fewer real options to keep in play.

Apple's Google partnership isn't just about technical capabilities. It's about economic reality. Building a competitive foundation model now requires:

- 500,000+ GPUs ($50+ billion in hardware)

- Multi-gigawatt data centers ($10+ billion in infrastructure)

- Specialized chips like Microsoft's Maia 200 ($5+ billion in R&D)

- Years of training time with guaranteed compute availability
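The dollar figures in that list can be sanity-checked with simple arithmetic. The sketch below uses only the article's own round numbers, treated as lower bounds; the per-GPU figure it prints is implied by those numbers, not a quoted price.

```python
# Back-of-envelope check of the capital figures above (all in USD).
# Inputs are the article's round numbers, read as lower bounds ("+").
GPU_COUNT = 500_000
GPU_HARDWARE_USD = 50e9       # "$50+ billion in hardware"
DATACENTER_USD = 10e9         # "$10+ billion in infrastructure"
CUSTOM_CHIP_RND_USD = 5e9     # "$5+ billion in R&D"


def implied_cost_per_gpu(total_usd: float, count: int) -> float:
    """Average all-in hardware cost per GPU implied by a total budget."""
    return total_usd / count


def total_capex(*components_usd: float) -> float:
    """Sum of upfront capital components (excludes training time and operations)."""
    return sum(components_usd)


per_gpu = implied_cost_per_gpu(GPU_HARDWARE_USD, GPU_COUNT)
capex = total_capex(GPU_HARDWARE_USD, DATACENTER_USD, CUSTOM_CHIP_RND_USD)
print(f"implied cost per GPU: ${per_gpu:,.0f}")           # $100,000
print(f"minimum upfront capex: ${capex / 1e9:.0f} billion")  # $65 billion
```

The total, roughly $65 billion, leaves out the years of guaranteed training compute in the last bullet, so it is a floor rather than an estimate.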

Meanwhile, DeepSeek's latest architecture work shows that efficiency gains can come from research breakthroughs, not just larger models. Its "Manifold-Constrained Hyper-Connections" approach reduces training instability as models scale: the kind of innovation that requires deep technical expertise, not just capital.
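For intuition about what a "manifold constraint" on connections can buy, here is a toy sketch. It assumes, as a simplification, that the constraint keeps each layer's stream-mixing matrix doubly stochastic (non-negative, every row and column summing to 1), enforced with the classic Sinkhorn-Knopp normalization. This illustrates that general technique and is not DeepSeek's implementation; the stream count, depth, and iteration count are arbitrary choices.

```python
import numpy as np


def sinkhorn_knopp(logits: np.ndarray, iters: int = 100) -> np.ndarray:
    """Turn an unconstrained square matrix into an (approximately) doubly
    stochastic one: non-negative, every row and column summing to 1."""
    m = np.exp(logits)  # exponentiate so every entry is strictly positive
    for _ in range(iters):
        m = m / m.sum(axis=1, keepdims=True)  # normalize rows
        m = m / m.sum(axis=0, keepdims=True)  # normalize columns
    return m


rng = np.random.default_rng(0)
n_streams, n_layers = 4, 64
signal = np.array([4.0, -1.0, 0.5, 2.0])  # one value per residual stream

unconstrained = signal.copy()
constrained = signal.copy()
for _ in range(n_layers):
    raw = rng.normal(size=(n_streams, n_streams))
    # Free mixing: identity plus a random perturbation; nothing bounds its scale.
    unconstrained = (np.eye(n_streams) + 0.5 * raw) @ unconstrained
    # Constrained mixing: each output is a weighted average of the inputs,
    # so the total is preserved and no entry can exceed the largest input.
    constrained = sinkhorn_knopp(raw) @ constrained

print("unconstrained max |x|:", np.abs(unconstrained).max())
print("constrained:", constrained, "sum:", constrained.sum())
```

The contrast is the point: the unconstrained stack's scale after 64 layers is left to chance, while the constrained stack stays bounded by construction, which is one way a structural constraint can trade raw flexibility for stability.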

The Pattern

We've seen this movie before. Cloud computing followed the identical trajectory from 2008-2014. Early adopters experimented with multiple providers, maintained hybrid strategies, and built vendor-agnostic architectures. Then economics kicked in. By 2015, enterprise buyers were forced to choose AWS, Azure, or Google Cloud because running multi-cloud became prohibitively complex and expensive.

The same consolidation is happening in AI, only faster. Cloud infrastructure consolidated over the better part of a decade, while AI compute requirements are doubling roughly every six months and power grid expansion takes years.
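The mismatch is easier to feel with numbers. Assuming the six-month doubling holds steadily (a simplification), the sketch below shows how much demand would grow while a new grid project is still being built; the lead times are hypothetical inputs, not sourced figures.

```python
def growth_multiplier(months: float, doubling_months: float = 6.0) -> float:
    """Factor by which a quantity grows if it doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


# Hypothetical lead times for bringing new grid capacity online, in years.
for lead_time_years in (1, 2, 3, 5):
    factor = growth_multiplier(lead_time_years * 12)
    print(f"{lead_time_years} yr lead time -> demand grows {factor:,.0f}x")
# 1 yr -> 4x, 2 yr -> 16x, 3 yr -> 64x, 5 yr -> 1,024x
```

Under this assumption, a power project that takes three years to deliver arrives into a world with 64 times the demand it was planned against.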

Enterprise survey data supports this shift. KPMG reports that 67% of companies will maintain AI spending even in a recession, but they're cutting overlapping solutions and concentrating on "winners." Enterprise buyers are moving from experimentation to consolidation faster than anyone predicted.

The Stakes

By Q3 2026, enterprises will face a binary choice: commit to one of the three or four remaining AI platforms, or get priced out of competitive AI capabilities entirely.

Companies still running "AI shopping" strategies will find themselves negotiating from weakness as platform providers prioritize committed customers during compute allocation crunches. Those who pick wrong will face migration costs that make ERP replacements look trivial.

The window for platform-agnostic strategies is closing faster than most enterprises realize.

What This Means For You

For CTOs

Stop shopping, start committing. By March, identify your primary AI platform (OpenAI/Microsoft, Google, or Anthropic) and negotiate multi-year compute commitments. The days of month-to-month AI experimentation are ending.

Audit your AI portfolio now. If you're running more than two foundation model providers in production, consolidate by Q2. The overhead costs will become unsustainable as platform providers optimize for committed customers.

Plan for vertical specialization. OpenAI's healthcare launch signals the shift from general-purpose to industry-specific models. Budget for specialized vertical models rather than trying to adapt general models to your domain.

For AI Product Leaders

Abandon the vendor-agnostic strategy. Your competitive advantage will come from deeper platform integration, not platform flexibility. Companies that go "all-in" on a single platform will get earlier access to new capabilities and better pricing.

Bet on efficiency, not scale. DeepSeek's architectural work suggests that model efficiency matters more than parameter count. Partner with platforms that prioritize inference cost and latency over marketing-friendly model sizes.

Focus on workflow integration, not model switching. The Model Context Protocol is becoming standard infrastructure. Build your AI strategy around workflow integration rather than model comparison.
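The Model Context Protocol standardizes how an AI application calls external tools, using JSON-RPC 2.0 messages. As a minimal sketch, the function below builds a message in the shape of the spec's `tools/call` request. The tool name `lookup_invoice` and its arguments are hypothetical, and transport, the initialization handshake, and authentication are deliberately left out.

```python
import json


def tools_call_request(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


# Hypothetical tool exposed by a hypothetical internal MCP server.
msg = tools_call_request(1, "lookup_invoice", {"invoice_id": "INV-1042"})
print(msg)
```

The request names a workflow tool, not a model, which is why investment in tool definitions tends to outlast any single model comparison.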

For Engineering Leaders

Hire for platform-specific expertise. Generalist AI engineers are becoming less valuable than specialists who understand specific platforms deeply. If you're betting on OpenAI, hire former OpenAI engineers. If Google, hire ex-Google engineers.

Redesign for inference efficiency. With compute becoming scarce, architectural decisions around batching, caching, and edge deployment will determine your AI economics. Plan infrastructure assuming 3-5x cost increases for compute.
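To make "plan for 3-5x cost increases" concrete, here is a sketch of one lever the paragraph names: caching. It assumes an exact-match cache where hits are free and every miss pays the full new price, which ignores storage cost, semantic (fuzzy) matching, and stale answers. `fake_model` is a hypothetical stand-in for a billed API call. Under those assumptions, holding spend flat at a price multiplier k requires a hit rate of 1 - 1/k.

```python
from collections import OrderedDict
from typing import Callable


def break_even_hit_rate(price_multiplier: float) -> float:
    """Cache hit rate at which a price increase leaves total model spend flat,
    assuming hits cost nothing and every miss pays the new price."""
    return 1.0 - 1.0 / price_multiplier


class ResponseCache:
    """Exact-match LRU cache in front of a model call (illustrative only)."""

    def __init__(self, call_model: Callable[[str], str], capacity: int = 1024):
        self.call_model = call_model
        self.capacity = capacity
        self.entries = OrderedDict()  # prompt -> response, oldest first
        self.hits = 0
        self.misses = 0

    def get(self, prompt: str) -> str:
        if prompt in self.entries:
            self.entries.move_to_end(prompt)  # mark as most recently used
            self.hits += 1
            return self.entries[prompt]
        self.misses += 1
        response = self.call_model(prompt)  # the only billed path
        self.entries[prompt] = response
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict least recently used
        return response

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


for k in (3, 5):
    print(f"{k}x price increase -> need {break_even_hit_rate(k):.0%} cache hit rate")


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a billed model API call."""
    return prompt.upper()


cache = ResponseCache(fake_model, capacity=2)
for p in ["a", "b", "a", "b", "a", "c"]:
    cache.get(p)
print(f"hit rate: {cache.hit_rate:.0%}")  # 50%
```

Under this toy model, a 3x increase requires roughly two in three requests to be served from cache and a 5x increase requires four in five, which is why caching carries so much weight in the economics.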

Build for platform lock-in. Stop building abstraction layers that hide platform-specific optimizations. Your competitive advantage will come from platform-native implementations, not portable code.

What We're Watching

By Q2 2026: Enterprise AI procurement shifts from RFPs to allocation requests as compute scarcity forces rationing among platform providers.

If power grid constraints persist: Expect geographic AI inequality as data centers concentrate in regions with power availability, forcing enterprises to accept latency trade-offs.

By Q4 2026: The three-platform oligopoly crystallizes (OpenAI/Microsoft, Google, Anthropic) with everyone else relegated to specialized niches or acquired by platform players.

If efficiency breakthroughs continue: DeepSeek-style architectural improvements could disrupt the capital intensity thesis, but only for companies with world-class AI research teams.

Watch for enterprise AI "hostage situations": Companies that over-invested in single platforms will struggle with pricing negotiations as switching costs become prohibitive.

The Bottom Line

The enterprise AI market just shifted from "try before you buy" to "commit or get cut off." While everyone was debating model capabilities, the infrastructure economics created a winner-take-all dynamic that most enterprises aren't prepared for.

The companies that recognize this shift and make platform commitments in Q1 2026 will have negotiating leverage and compute allocation priority. Those who wait for perfect information will find themselves begging for scraps in a seller's market.

Mark my words: by January 2027, we'll look back at this week as the moment when enterprise AI choice became enterprise AI dependency. The question isn't which platform will win — it's whether you'll secure your seat before the music stops.