When Your AI Should Write Code, Not Call Functions

Most AI agents don’t need this.

If your agent has a clear job and a handful of tools — answer customer questions, review documents, analyze data — the standard approach works fine. The AI knows what it can do, picks the right action, does it. Simple.

But some agents face different challenges:

Too many tools. The agent connects to everything — Salesforce, Slack, Google Drive, your databases, internal systems. Hundreds of possible actions. The AI spends more energy figuring out what it could do than actually doing anything useful.

Vague jobs. Instead of “handle support tickets,” the mandate is “look across our systems and figure out what needs doing.” The agent can’t know ahead of time which tools it’ll need.

For these situations, there’s a workaround worth knowing.

The Problem with “Connect to Everything”

New standards such as the Model Context Protocol (MCP) made it easy to plug AI agents into all your business systems. One integration approach, thousands of tools available. Sounds great.

Here’s what vendors don’t mention.

Every tool the agent might use needs to be explained upfront. What it does, what information it needs, what it returns. Connect 50 systems with 10 actions each, and the AI is digesting 500 instruction manuals before it even sees what you’re asking for.

That’s slow. And expensive.
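To put rough numbers on that, here is a back-of-the-envelope sketch. The 50 systems and 10 actions come from the example above; the tokens-per-description figure and the price are assumptions picked for illustration, so substitute your own.

```python
# Back-of-the-envelope cost of loading every tool description upfront.
# TOKENS_PER_DESCRIPTION and the price are illustrative assumptions,
# not measurements from any real system.

SYSTEMS = 50                            # from the example in the text
ACTIONS_PER_SYSTEM = 10                 # from the example in the text
TOKENS_PER_DESCRIPTION = 300            # assumed average size of one description
PRICE_PER_MILLION_INPUT_TOKENS = 3.00   # assumed input price in USD


def upfront_overhead(systems: int, actions: int, tokens_per_tool: int) -> int:
    """Tokens spent on tool descriptions before the request itself is read."""
    return systems * actions * tokens_per_tool


def cost_per_request(tokens: int, price_per_million: float) -> float:
    """Input cost in USD of sending `tokens` tokens once."""
    return tokens / 1_000_000 * price_per_million


overhead_tokens = upfront_overhead(SYSTEMS, ACTIONS_PER_SYSTEM, TOKENS_PER_DESCRIPTION)
overhead_cost = cost_per_request(overhead_tokens, PRICE_PER_MILLION_INPUT_TOKENS)

print(f"{SYSTEMS * ACTIONS_PER_SYSTEM} tools -> {overhead_tokens:,} tokens per request")
print(f"about ${overhead_cost:.2f} per request before any work is done")
```

Under these assumptions the overhead is paid on every request, whether the agent ends up using one tool or none.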

There’s also the data problem. Say you want to move meeting notes from Google Drive into a Salesforce record. The AI reads the document, holds it in working memory, then writes it to Salesforce. Long documents pass through the AI twice. Complex workflows hit limits and fail.

A Different Approach

Cloudflare and Anthropic independently landed on the same insight: AI is much better at writing code than at following tool-calling instructions.

Why? Training. AI models have seen billions of lines of real code. Tool-calling formats are artificial — limited examples, unfamiliar structure.

Cloudflare put it well: asking an AI to use synthetic tool formats is like teaching Shakespeare Mandarin for a month, then asking him to write a play in it. Not his best work.
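A small sketch of the difference, using one task expressed both ways. The tool names, the call format, and the stub functions are all made up for illustration; real tool-calling formats differ between vendors.

```python
# Task: post the title of every open ticket to a support channel.
# Everything here is a hypothetical stand-in, not a real API.

TICKETS = [
    {"title": "Login fails", "status": "open"},
    {"title": "Invoice missing", "status": "open"},
    {"title": "Typo on homepage", "status": "closed"},
]
posted: list[tuple[str, str]] = []


def list_tickets(status: str) -> list[dict]:
    """Stub for a ticketing system lookup."""
    return [t for t in TICKETS if t["status"] == status]


def post_message(channel: str, text: str) -> None:
    """Stub for a chat integration; records the message instead of sending it."""
    posted.append((channel, text))


# Style 1: structured tool calls. The model emits one of these per step and
# waits for each result before producing the next, so steps grow with the data.
tool_calls = [
    {"tool": "tickets.list", "arguments": {"status": "open"}},
    {"tool": "chat.post", "arguments": {"channel": "#support", "text": "Login fails"}},
    {"tool": "chat.post", "arguments": {"channel": "#support", "text": "Invoice missing"}},
]

# Style 2: ordinary code. The model writes this once, and the loop handles
# two tickets or two thousand without further model involvement.
for ticket in list_tickets("open"):
    post_message("#support", ticket["title"])

print(f"{len(tool_calls)} structured calls versus 1 script")
print(posted)
```

The second style is also the one the model has seen far more of in training: a plain loop over function calls.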

The results speak for themselves:

- Anthropic: roughly 98% fewer tokens in one reported example (about 150,000 tokens down to 2,000)

- Cloudflare: 81% faster on complex tasks

- Research (the CodeAct paper): up to 20% higher success rates

Two Tools Instead of Hundreds

Instead of explaining every possible action upfront, give the agent two simple capabilities:

1. Look things up. When the agent needs to work with Salesforce, it searches for the relevant instructions. Google Drive? Looks that up too. Context7 does exactly this — it gives AI agents access to up-to-date documentation on demand. The agent only learns about tools when it actually needs them.

2. Write and run scripts. Instead of calling tools one at a time, the agent writes a small program that handles the whole job, then runs it.

Your systems still exist. Salesforce, Slack, everything. But instead of 500 instruction manuals loaded upfront, the agent looks up what it needs, writes code to get the job done, and executes it.
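Here is a minimal sketch of what those two capabilities could look like. The documentation index is a hard-coded dictionary invented for this example, and the function names `search_docs` and `run_script` are assumptions; a real setup would query a documentation service and run scripts inside a sandbox.

```python
import contextlib
import io

# Hypothetical in-memory documentation index. The entries and their
# signatures are invented for illustration, not taken from any real SDK.
DOCS = {
    "salesforce.update_record": "update_record(record_id, fields): update a Salesforce record.",
    "gdrive.get_document": "get_document(doc_id): return the text of a Drive document.",
    "slack.post_message": "post_message(channel, text): post a message to a Slack channel.",
}


def search_docs(query: str, limit: int = 3) -> list[str]:
    """Tool 1: return doc entries whose name or text contains any query word."""
    words = query.lower().split()
    hits = [
        f"{name}: {text}"
        for name, text in DOCS.items()
        if any(w in name.lower() or w in text.lower() for w in words)
    ]
    return hits[:limit]


def run_script(source: str) -> str:
    """Tool 2: execute a script and return whatever it printed.

    exec() provides NO isolation. It stands in here for a sandboxed runtime.
    """
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(source, {"__name__": "__agent_script__"})
    return buffer.getvalue()


# The model is told about these two tools only, however many systems exist.
print(search_docs("salesforce"))
print(run_script("print(2 + 2)"))
```

The point of the design is that the tool list stays the same size as integrations are added; only the documentation index grows.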

Data moves directly between systems. Those meeting notes go straight from Drive to Salesforce without bouncing through the AI twice.
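As a sketch, the script an agent writes for the meeting-notes job might look like the `copy_notes` function below. `FakeDrive`, `FakeSalesforce`, and their methods are hypothetical stand-ins so the example runs on its own; they do not mirror any real SDK.

```python
# Hypothetical stand-ins for real integrations, invented for illustration.
class FakeDrive:
    def __init__(self, documents: dict[str, str]):
        self._documents = documents

    def get_document(self, doc_id: str) -> str:
        return self._documents[doc_id]


class FakeSalesforce:
    def __init__(self) -> None:
        self.records: dict[str, dict[str, str]] = {}

    def update_record(self, record_id: str, fields: dict[str, str]) -> None:
        self.records.setdefault(record_id, {}).update(fields)


def copy_notes(drive: FakeDrive, salesforce: FakeSalesforce,
               doc_id: str, record_id: str) -> str:
    """Move the notes between systems without the model reading them.

    The full text stays inside the execution environment. Only the short
    summary string returned here would go back into the model's context.
    """
    notes = drive.get_document(doc_id)
    salesforce.update_record(record_id, {"Meeting_Notes": notes})
    return f"Copied {len(notes)} characters from {doc_id} to {record_id}"


drive = FakeDrive({"doc-123": "Discussed renewal terms. " * 2000})
salesforce = FakeSalesforce()
summary = copy_notes(drive, salesforce, "doc-123", "lead-42")
print(summary)
```

In this sketch a 50,000-character document moves across, and the model only ever sees a one-line confirmation.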

Not New, Just Newly Relevant

Researchers figured this out in early 2024. The “CodeAct” paper (“Executable Code Actions Elicit Better LLM Agents”) showed that AI agents perform better when they write code than when they use structured commands. The findings were solid.

What’s changed is scale. Thousands of integrations now exist. Companies are connecting agents to dozens of internal systems. The “too many tools” problem moved from academic research to everyday headache.

When This Helps

- Agents that work across many systems without predictable patterns

- High-volume operations with multiple steps

- Flexible automation where the agent figures out its own tasks

When It Doesn’t

- Agents with clear jobs and stable toolsets — just use regular tool calling

- Predictable workflows — simpler to build the traditional way

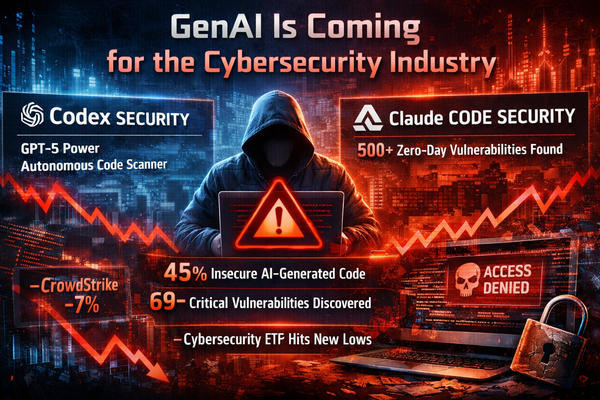

- Environments where running AI-generated code isn’t safe or practical

The Tradeoff

Complexity moves; it doesn’t vanish. Instead of managing tool descriptions, you’re managing code execution: sandboxing, permissions, time limits, and error handling. Different problems to solve, different skills needed.

For agents drowning in tools or facing open-ended jobs, it’s worth it. For focused agents with defined roles, it’s unnecessary overhead.
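To give a feel for what managing code execution involves, here is a minimal sketch that runs a generated script in a separate Python process with a time limit. The `run_untrusted` name and the returned dictionary shape are assumptions for this example. A child process with a timeout is not a security boundary; production setups typically add containers or similar isolation plus network and filesystem restrictions.

```python
import subprocess
import sys
import tempfile


def run_untrusted(source: str, timeout_seconds: float = 5.0) -> dict:
    """Run a script in a child Python process with a time limit.

    This is NOT a sandbox: the child can still touch the network and any
    files the parent process can reach. It only shows the moving parts.
    """
    with tempfile.TemporaryDirectory() as workdir:
        try:
            result = subprocess.run(
                # -I is isolated mode: ignores PYTHON* env vars and user site-packages
                [sys.executable, "-I", "-c", source],
                cwd=workdir,            # start in a throwaway directory
                capture_output=True,
                text=True,
                timeout=timeout_seconds,
            )
        except subprocess.TimeoutExpired:
            return {"ok": False, "stdout": "", "stderr": "timed out"}
    return {
        "ok": result.returncode == 0,
        "stdout": result.stdout,
        "stderr": result.stderr,
    }


print(run_untrusted("print('hello from the script')"))
print(run_untrusted("while True: pass", timeout_seconds=1.0)["stderr"])
```

Even this small version has to decide what a timeout means, where files go, and what gets reported back to the model, which is the kind of work the tradeoff refers to.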

Bottom Line

Most agents don’t need to write code. They have clear jobs and manageable toolsets.

But if your agent connects to many systems, or has a loosely defined role that requires flexibility, this pattern helps. Pair a documentation lookup tool such as Context7 with secure code execution, and your integrations become infrastructure instead of overhead.

Match the solution to your actual problem.
